Ollama for Windows Official Preview Available

Last Update: Jun 7, 2024

If you’re a blog reader or follow me on social media, you already know I’m a huge fan of Ollama. It’s an excellent platform for running Large Language Models locally: easy to use and powerful. Programs like LM Studio and GPT4All are simpler to set up, but Ollama gives you a lot more control and can be customized very nicely. You can be running models or even writing AI-powered applications in minutes.

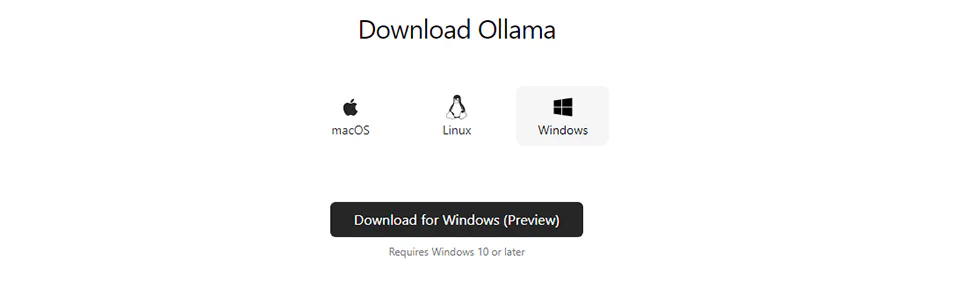

Until now, Ollama has only been officially available for Mac and Linux. One solution was to run Ollama in WSL (Windows Subsystem for Linux), which works well. But now there’s an official Windows version.

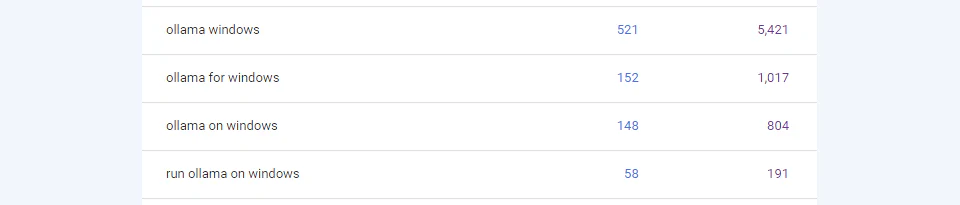

I noticed in my SEO logs that I have a few folks searching for “Ollama in Windows,” so I thought I’d drop this article here.

Let’s dig in and check it out.

Installing Ollama in Windows

Go to the Windows download page on the Ollama website and click “Download for Windows”:

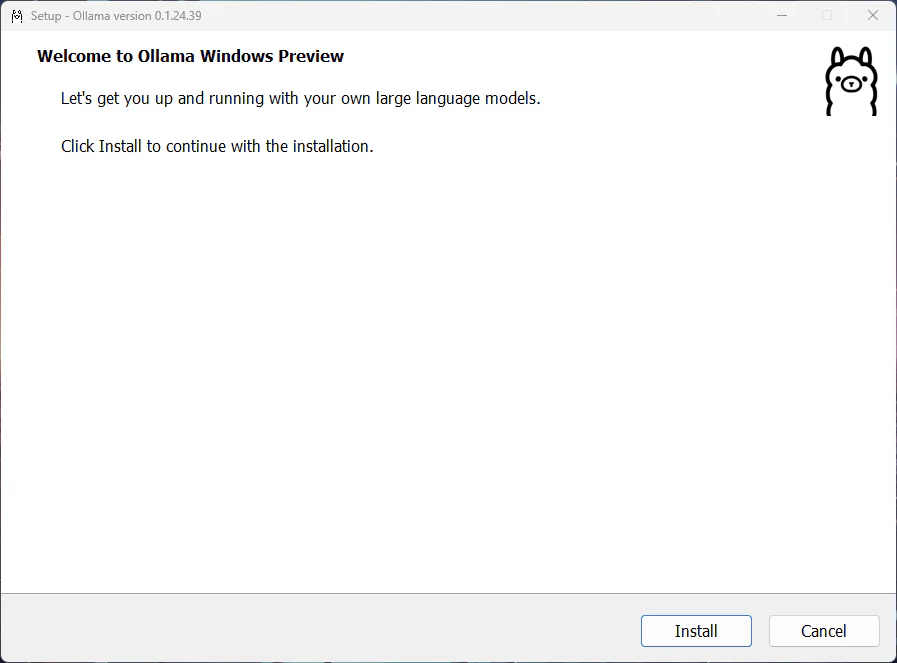

Run the executable, and you’ll see an installer window come up:

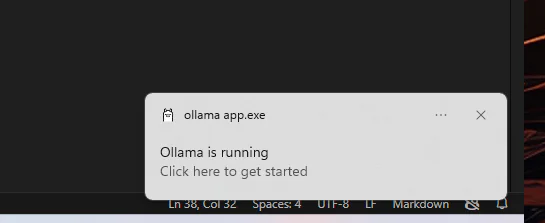

Click Install, and you’ll see a progress bar, followed by a popup on your taskbar.

When it finishes, you’ll have a cool little Ollama icon, which offers two options: “View Logs” and “Quit Ollama.”

Well, what now?

Using Ollama in Windows

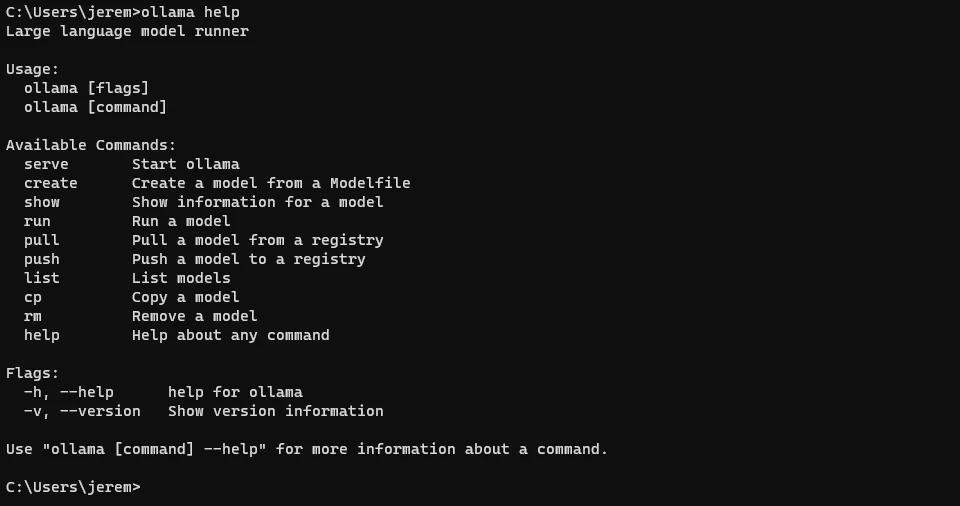

I assumed Ollama would now run from the command line in Windows, just as it does on Mac and Linux. Sure enough, I opened a command prompt and typed `ollama help`.

And there it is: the familiar Ollama prompt I’ve come to love. Next, go to the Ollama models page and grab a model. I’ll use the LLaVA model we looked at in my last article.

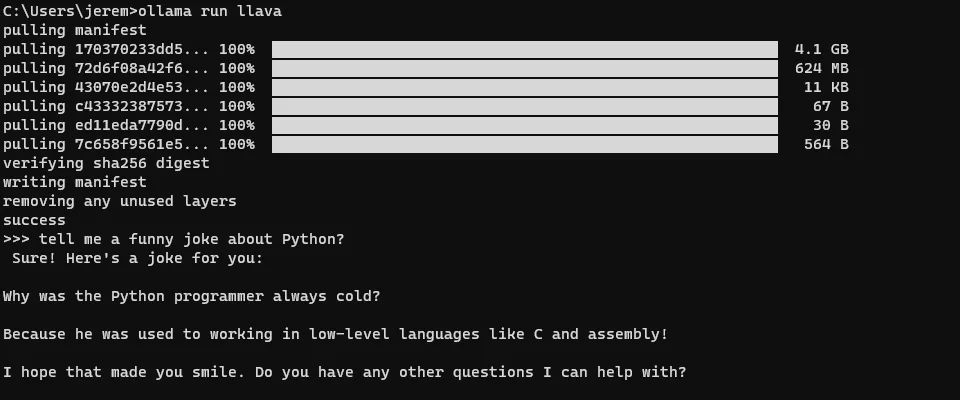

```
ollama run llava
```

And we’re up and running in no time! Quick and easy.

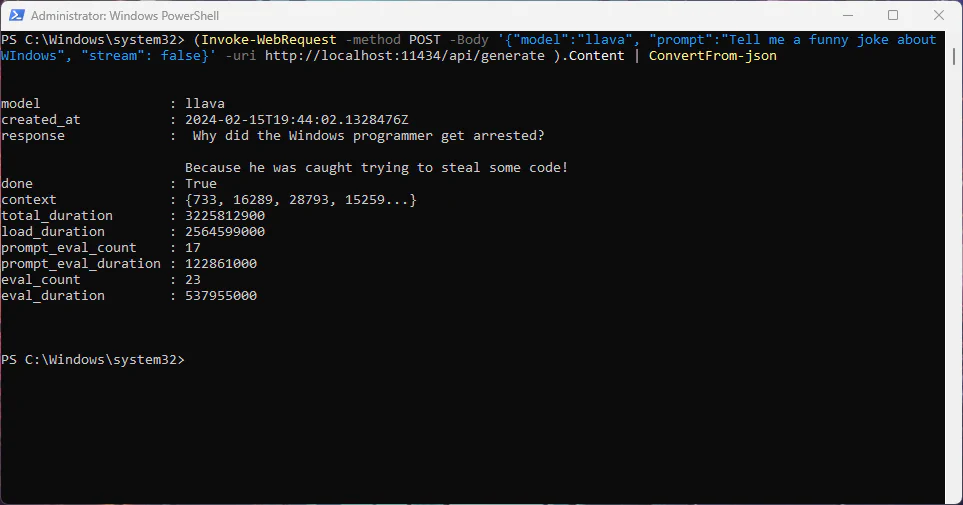

As the Ollama blog points out, the full Ollama API is available, served locally at http://localhost:11434, so you can call it from PowerShell if you like:

```powershell
(Invoke-WebRequest -Method POST -Uri http://localhost:11434/api/generate `
    -Body '{"model":"llava", "prompt":"Tell me a funny joke about Windows?", "stream": false}').Content | ConvertFrom-Json
```
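If you’d rather call the same endpoint from Python, here is a minimal sketch using only the standard library. It assumes Ollama is listening on the default http://localhost:11434 and that the llava model has already been pulled. The helper names (`build_generate_request`, `parse_generate_response`, `generate`) are my own illustrations, not part of any official Ollama client. With `"stream": false`, the server replies with a single JSON object whose `response` field holds the generated text.

```python
import json
import urllib.request

# Assumed default address of the local Ollama server (same URL as the PowerShell example).
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_generate_request(model: str, prompt: str,
                           url: str = OLLAMA_URL) -> urllib.request.Request:
    """Build a non-streaming POST request for Ollama's /api/generate endpoint."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def parse_generate_response(body: bytes) -> str:
    """Extract the generated text from a non-streaming /api/generate reply."""
    return json.loads(body)["response"]


def generate(model: str, prompt: str, timeout: float = 120.0) -> str:
    """Send a prompt to the locally running server and return the model's reply text."""
    request = build_generate_request(model, prompt)
    with urllib.request.urlopen(request, timeout=timeout) as reply:
        return parse_generate_response(reply.read())
```

With Ollama running, `print(generate("llava", "Tell me a funny joke about Windows?"))` should print the model’s joke. Splitting request building and response parsing out of `generate` keeps those pieces easy to test without a live server.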

The API is likely how you’ll be interacting with Ollama anyway, and because Ollama loads automatically, it’s ready to go without any extra setup.
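Because the server is already running in the background, a quick way to confirm it’s up, and to see which models you’ve pulled, is to query it directly. This is another standard-library sketch under the same assumption (default address http://localhost:11434). It relies on Ollama’s `GET /api/tags` endpoint, which returns the locally available models as JSON; the function names are illustrative only.

```python
import json
import urllib.error
import urllib.request

# Assumed default address of the local Ollama server.
OLLAMA_BASE = "http://localhost:11434"


def server_is_up(base_url: str = OLLAMA_BASE, timeout: float = 2.0) -> bool:
    """Return True if the server answers HTTP 200 at its base URL."""
    try:
        with urllib.request.urlopen(base_url, timeout=timeout) as reply:
            return reply.status == 200
    except (urllib.error.URLError, OSError):
        # Connection refused, timeout, HTTP error, etc.: treat as "not up".
        return False


def parse_model_names(body: bytes) -> list[str]:
    """Pull the model names out of an /api/tags JSON reply."""
    return [m["name"] for m in json.loads(body).get("models", [])]


def list_local_models(base_url: str = OLLAMA_BASE, timeout: float = 5.0) -> list[str]:
    """Ask the running server which models have been pulled locally."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=timeout) as reply:
        return parse_model_names(reply.read())
```

For example, `if server_is_up(): print(list_local_models())` should print something like `['llava:latest']` after running `ollama run llava`.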

Summary

So what do I think? It’s awesome. Let’s rate it:

- Ease of Installation - Super easy to install. I don’t know how they could have made it easier.

- Performance - On my laptop with an RTX 4060, it performs great: just as fast as WSL or Linux on the same machine.

- Ease of Use - Ollama loads automatically, and its interface is the same as on other platforms. Easy.

I love this new update and can’t wait to spend more time with it. Why does it matter? A native Windows installer opens Ollama up to Windows users who aren’t experts in Python environments or Linux. If you’re a Windows developer who wants a hassle-free way to run a large language model locally and write some apps for it, this is an awesome way to do it. It’s also a great way to learn more about large language models as you go.

Great work, Ollama team! I can’t wait to see how people are going to use this.

What are you doing with LLMs today? Let me know! Let’s talk.

Also, if you have any questions or comments, please reach out.

Happy hacking!